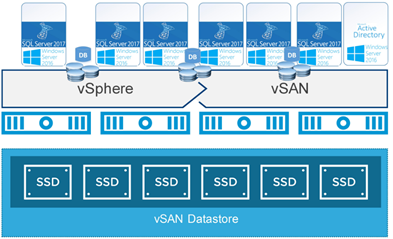

Recently, I decided to migrate AD from 2012 R2 to 2019 in my lab and, while I was at it, to migrate all other services (DFS, SQL and others) to 2019 as well. I decided to use the new feature that appeared in vSphere 6.7 U3, i.e. native support for SCSI-3 Persistent Reservations (SCSI-3 PR) on vSAN, and run a new Microsoft Windows Server Failover Cluster (WSFC) on it. As Piszki Lab now consists of two physical servers, such a cluster makes more sense, although of course it is still only a lab. There is also official VMware documentation describing the whole process; in addition, you can use the detailed instructions for deploying WSFC.

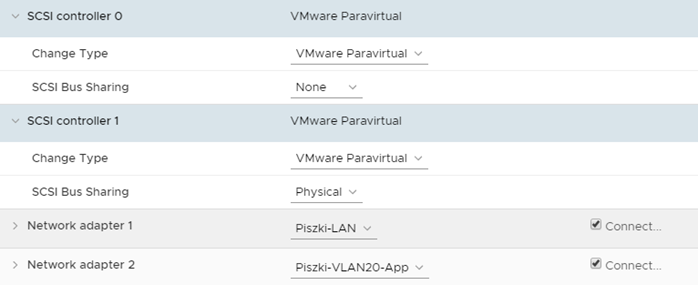

The whole process is not complicated; it is even simpler than traditional RDM from an FC SAN. We start by preparing two (or more) machines with Microsoft Windows Server 2019 (versions from 2012 up are supported). In my case, the 2019 template has the VBS option enabled, i.e. Virtualization Based Security. This forces the use of UEFI instead of BIOS and enables IOMMU and the virtualization extensions, but in conjunction with TPM 2.0 it offers interesting possibilities in the field of VM security (more on that another time). As for the virtual machine hardware version, it must be version 14. We add a new VMware Paravirtual disk controller and set its SCSI bus sharing to Physical.

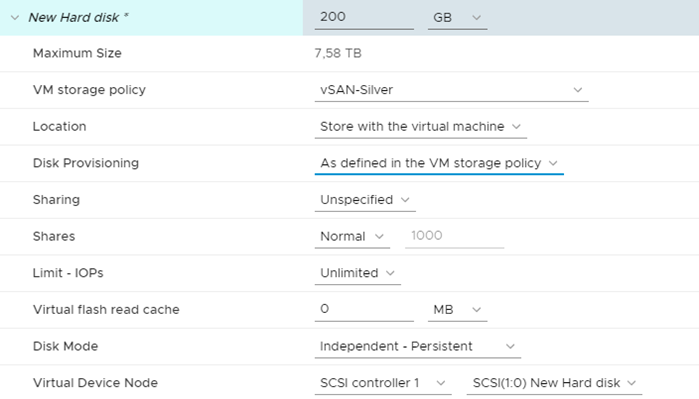

In the next step, on the first node of the cluster, we add a new disk, select the vSAN storage policy and the disk controller created earlier, and set Disk Mode to Independent – Persistent.

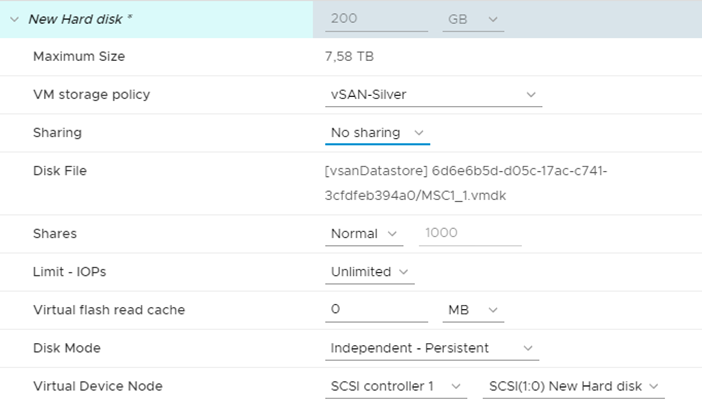

On the second node of the cluster, we attach the same disk as an existing disk, with the same settings.

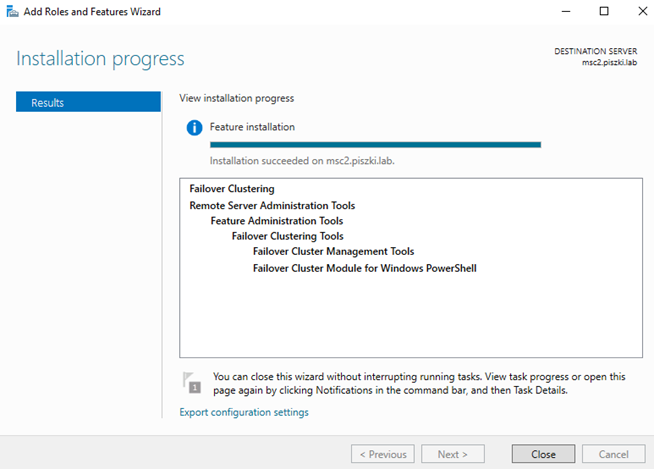

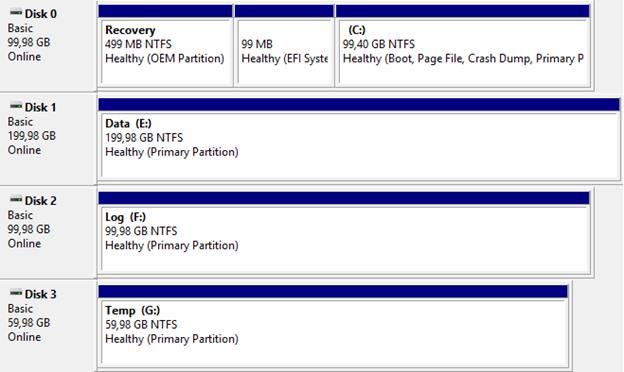

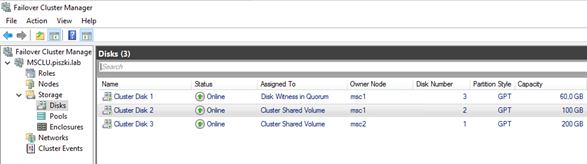
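The settings described so far are easy to get wrong on one of the nodes, so a small pre-flight check can help. Below is a minimal sketch in Python that only compares a hand-written description of each node against the requirements from the text. The class `SharedDiskConfig`, its field names and the string values are hypothetical and do not come from any vSphere API. The check also treats hardware version 14 as a minimum, which is an assumption on my part.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SharedDiskConfig:
    """Hand-written description of one cluster node (hypothetical field names)."""
    hardware_version: int   # virtual machine hardware version
    controller_type: str    # "pvscsi" stands for VMware Paravirtual
    bus_sharing: str        # "physical", "virtual" or "none"
    disk_mode: str          # "independent_persistent" for the shared disk


def check_node(cfg: SharedDiskConfig) -> List[str]:
    """Return a list of problems; an empty list means the node matches the text."""
    problems = []
    # Assumption: version 14 is treated as a minimum, not an exact match.
    if cfg.hardware_version < 14:
        problems.append("hardware version must be 14")
    if cfg.controller_type != "pvscsi":
        problems.append("shared disk needs a VMware Paravirtual controller")
    if cfg.bus_sharing != "physical":
        problems.append("SCSI bus sharing must be set to Physical")
    if cfg.disk_mode != "independent_persistent":
        problems.append("Disk Mode must be Independent - Persistent")
    return problems
```

Calling `check_node` for every node before powering the machines on gives a quick list of what still needs to be changed in the VM settings.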

We add more disks in a similar way. In my case these were three disks (200 GB, 100 GB and 60 GB) for the MS SQL cluster, but it is better to start with one, otherwise a random disk will be used as the quorum (this can of course be changed later). Next, we perform the standard configuration steps for a WSFC cluster: on both nodes we install the Failover Clustering feature (followed by a restart).

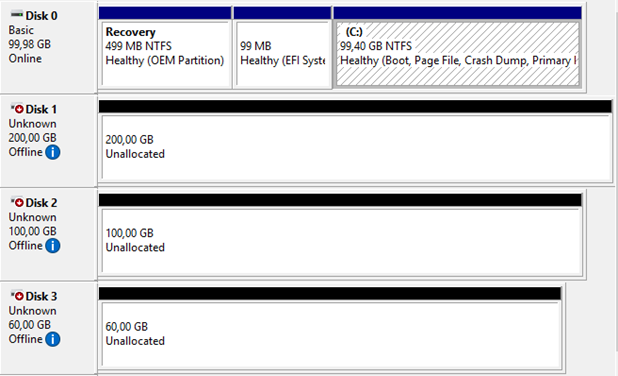

After the restart, we configure the disks on the first node; on the second node they should be displayed as Offline.

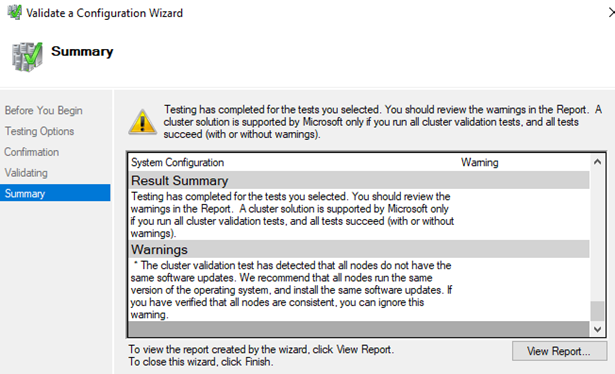

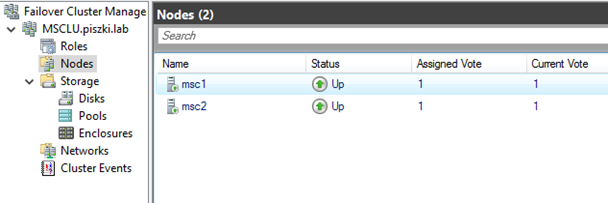

We run the Failover Cluster Manager console and check whether all settings are OK; the most important thing for us is whether the SCSI-3 PR test passed.

In the next step, we create a standard cluster; all connected disks should show up as ready to act as Cluster Shared Volumes.
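I did all of the Windows-side steps in the GUI, but the same three actions (installing the feature, validating the configuration, creating the cluster) can also be scripted. The sketch below is not what I ran in the lab. It only builds command lines for the standard PowerShell cmdlets `Install-WindowsFeature`, `Test-Cluster` and `New-Cluster`, and the helper names are hypothetical. Node names, the cluster name and the IP address are placeholders to be supplied by the caller.

```python
import subprocess
from typing import List


def ps(command: str) -> List[str]:
    """Wrap a PowerShell command line so it can be passed to subprocess."""
    return ["powershell.exe", "-NoProfile", "-Command", command]


def install_feature_cmd(node: str) -> List[str]:
    """Install the Failover Clustering feature on one node and restart it."""
    return ps(
        "Install-WindowsFeature -Name Failover-Clustering "
        f"-IncludeManagementTools -ComputerName {node} -Restart"
    )


def validate_cmd(nodes: List[str]) -> List[str]:
    """Run cluster validation; the SCSI-3 PR result is part of its report."""
    return ps(f"Test-Cluster -Node {','.join(nodes)}")


def create_cluster_cmd(name: str, nodes: List[str], address: str) -> List[str]:
    """Create the cluster with a static management IP address."""
    return ps(
        f"New-Cluster -Name {name} -Node {','.join(nodes)} "
        f"-StaticAddress {address}"
    )


def run(cmd: List[str]) -> None:
    """Execute a prepared command on a Windows host; raises on failure."""
    subprocess.run(cmd, check=True)
```

On a management host this could be used as `run(validate_cmd(["node1", "node2"]))`, with `node1` and `node2` replaced by the real node names. The storage section of the validation report is where the SCSI-3 Persistent Reservation result should be checked before creating the cluster.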

And that is basically everything. As you can see in the attached pictures, it is a very simple solution; we will soon learn whether it is suitable for production systems. It is worth noting that this solution has been available in VMware Cloud on AWS for some time.
